Introduction

Artificial intelligence (AI) systems are becoming increasingly prevalent in our society, influencing decisions and shaping our daily lives in ways we may not even be aware of. However, these systems are not immune to bias and can inadvertently perpetuate inequality and discrimination.

As the use of AI continues to grow, it is crucial that we uncover and address the biases present in these systems to ensure fairness and equality for all. This article provides a step-by-step guide to testing AI systems for fairness, helping you uncover bias and create more equitable AI solutions.

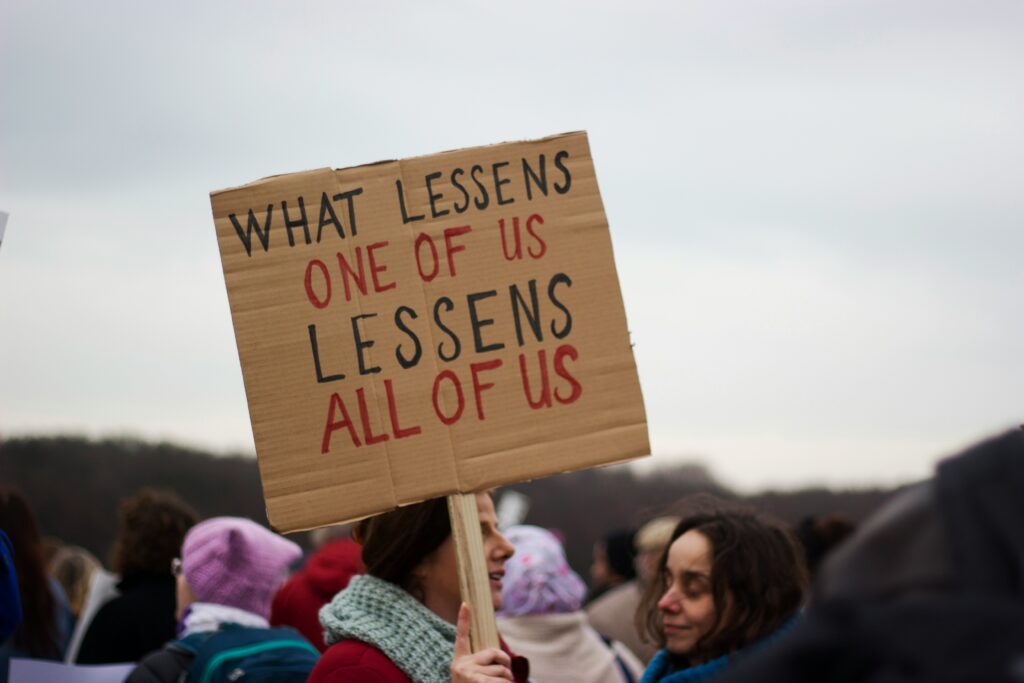

Photo by Ashley Nicole on Unsplash

Understanding the importance of fairness in AI systems

As artificial intelligence (AI) systems become increasingly integrated into various aspects of our lives, it is crucial to recognize the importance of fairness in their design and implementation. AI systems have the potential to make decisions that have far-reaching consequences, ranging from employment and finance to healthcare and criminal justice. Therefore, it is essential to ensure that these systems are fair and unbiased, and do not perpetuate existing inequalities or discriminate against certain groups.

Fairness in AI systems refers to the equitable treatment of individuals or groups, regardless of their race, gender, age, or any other protected characteristic. It aims to prevent bias and discrimination, promoting equal opportunities and outcomes for all individuals. Failure to address bias and ensure fairness in AI systems can lead to unjust decisions and reinforce existing societal disparities.

One of the key challenges in achieving fairness in AI systems is the potential for bias in the data used to train these systems. AI algorithms learn from historical data, and if this data contains biases or reflects existing inequalities, the resulting AI models may perpetuate those biases. For example, if historical hiring data is biased towards certain demographics, an AI-powered hiring system trained on that data may inadvertently discriminate against underrepresented groups.

Recognizing the importance of fairness in AI systems, researchers and technologists have developed various methods and frameworks to test and evaluate the fairness of these systems. These include statistical tests, algorithmic auditing, and model interpretability techniques. By systematically testing AI systems for fairness, we can identify and rectify any biases present in the models and ensure more equitable outcomes.

Ensuring fairness in AI systems goes beyond technical considerations; it also requires a comprehensive understanding of the context in which these systems are deployed. This includes taking into account the societal, ethical, and legal implications of AI applications. It is important to involve diverse stakeholders, including experts from various disciplines, impacted communities, and regulatory bodies, in the process of testing and evaluating AI systems for fairness.

By striving for fairness in AI systems, we can harness the potential of AI to create positive social impacts and promote equitable outcomes. As AI continues to shape our society, it is our collective responsibility to address biases, uncover discrimination, and create fair and just AI systems that serve the needs of all individuals.


Identifying biases in AI systems

Identifying biases in AI systems is a crucial step in ensuring fairness and avoiding discrimination. Bias can enter through the training data, the algorithms, or the way the system is evaluated and updated, so each of these needs to be examined. Here are some steps to identify biases in AI systems:

- Data collection and preprocessing: The first step is to carefully collect and preprocess the data that will be used to train the AI system. This includes ensuring a diverse and representative dataset that includes data from various groups and demographics. It is important to be mindful of any biases in the data collection process itself, such as underrepresentation or overrepresentation of certain groups.

- Data analysis: Once the data is collected, it is important to conduct a thorough analysis to identify any biases that may be present. This can involve examining the distribution of data across different demographic groups and identifying any significant differences or imbalances. It is also worth checking whether the labels or features encode stereotypes, for example whether the rate of positive labels differs sharply between groups.

- Algorithm analysis: After analyzing the data, it is necessary to examine the algorithms that will be used in the AI system for any inherent biases. This includes understanding the underlying logic and assumptions of the algorithms and assessing whether they may lead to biased outcomes. It is also important to consider the potential impact of the algorithm on different demographic groups and whether it may disproportionately affect certain individuals or communities.

- Testing and evaluation: Once the AI system is developed, it is crucial to test and evaluate its performance for fairness and bias. This can involve conducting various tests and experiments to assess whether the system is treating all individuals or groups equitably. Statistical tests and algorithmic auditing can be employed to identify and measure any biases that may exist.

- Iterative improvement: Addressing biases in AI systems is an ongoing process. As new data becomes available or new insights are gained, it is important to continually reassess and improve the system to minimize biases. This can involve refining the data collection process, adjusting the algorithms, or implementing additional measures to ensure fairness.

By following these steps and actively addressing biases, AI systems can be developed and deployed in a way that promotes fairness and equal opportunities for all individuals. It is essential to prioritize the identification and mitigation of biases to ensure that AI systems do not perpetuate existing inequalities or discriminate against certain groups.
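
The data analysis step above can be sketched in a few lines. This is a minimal illustration, not a full audit: it assumes each record is a dictionary, and the field names `group` and `hired`, along with the toy data, are hypothetical. It reports each group's share of the dataset and its rate of positive labels, which are two of the imbalances the step asks you to look for.

```python
from collections import Counter


def group_summary(records, group_key, label_key):
    """Return {group: (share_of_dataset, positive_label_rate)}."""
    counts = Counter(r[group_key] for r in records)
    positives = Counter(r[group_key] for r in records if r[label_key] == 1)
    total = len(records)
    return {g: (counts[g] / total, positives[g] / counts[g]) for g in counts}


# Hypothetical toy data: field names and values are illustrative only.
records = (
    [{"group": "A", "hired": 1}] * 30
    + [{"group": "A", "hired": 0}] * 30
    + [{"group": "B", "hired": 1}] * 5
    + [{"group": "B", "hired": 0}] * 35
)

summary = group_summary(records, "group", "hired")
for g, (share, rate) in sorted(summary.items()):
    print(f"group {g}: share={share:.2f}, positive rate={rate:.3f}")
```

In this toy dataset group B is both smaller and far less likely to carry a positive label. A model trained on such data may learn that pattern, which is the kind of imbalance worth investigating before training.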


Testing for bias: Step-by-step guide

Testing for bias in AI systems is a fundamental step in ensuring fairness and preventing discrimination. By following a systematic approach, developers can identify and address any biases that may be present in their AI models. Here is a step-by-step guide for testing AI systems for bias:

- Define protected attributes: Start by identifying the protected attributes that are relevant to the AI system. These attributes can include race, gender, age, and other characteristics that should not be used as a basis for discrimination.

- Identify potential bias sources: Next, examine the data and algorithms for potential sources of bias. This can involve reviewing the data collection process, assessing the algorithms used, and understanding the underlying assumptions and logic.

- Select appropriate metrics: Choose the metrics that will be used to measure bias in the AI system. Common metrics include disparate impact (the ratio of favorable-outcome rates between groups), equal opportunity (equal true positive rates across groups), and predictive parity (equal precision across groups). These metrics help quantify the extent to which the system may be biased against certain groups.

- Conduct statistical analysis: Analyze the outcomes for different demographic groups and use statistical tests to determine whether the differences between them are statistically significant or could plausibly be due to chance.

- Perform fairness tests: Implement fairness tests to evaluate the performance of the AI system. This can involve simulating different scenarios and assessing whether the system is consistently treating all individuals or groups in a fair and equitable manner.

- Interpret the results: Interpret the results of the testing to gain insights into the presence and extent of bias in the AI system. This can involve identifying patterns or trends that indicate potential biases and understanding their implications.

- Mitigate biases: Once biases are identified, take steps to mitigate their impact. This can involve revisiting the data collection process, retraining the model with a more diverse and representative dataset, or adjusting the algorithms to reduce bias.

- Monitor and evaluate: Continuously monitor and evaluate the AI system to ensure that biases are being effectively addressed. Regularly assess the system’s performance and make improvements as needed.
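
As a rough illustration of the metrics named in the steps above, the sketch below computes disparate impact, an equal opportunity difference, and a predictive parity difference for two groups. The function names, the data layout (a dictionary mapping each group to its true labels and predictions), and the toy values are all assumptions made for this example. It also assumes binary labels and that the reference group receives at least one positive prediction.

```python
def group_rates(y_true, y_pred):
    """Selection rate, true positive rate, and precision for one group."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "selection_rate": (tp + fp) / len(y_pred),
        "tpr": tp / (tp + fn) if tp + fn else float("nan"),
        "precision": tp / (tp + fp) if tp + fp else float("nan"),
    }


def fairness_report(data, reference, comparison):
    """Compare the `comparison` group against the `reference` group."""
    ref = group_rates(*data[reference])
    cmp_ = group_rates(*data[comparison])
    return {
        # Ratio of selection rates; 1.0 means equal rates.
        "disparate_impact": cmp_["selection_rate"] / ref["selection_rate"],
        # Difference in true positive rates; 0.0 means equal opportunity.
        "equal_opportunity_diff": cmp_["tpr"] - ref["tpr"],
        # Difference in precision; 0.0 means predictive parity.
        "predictive_parity_diff": cmp_["precision"] - ref["precision"],
    }


# Hypothetical toy data: (true labels, model predictions) per group.
data = {
    "A": ([1, 1, 1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]),
    "B": ([1, 1, 1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]),
}

report = fairness_report(data, reference="A", comparison="B")
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

On this toy data the disparate impact ratio is 0.4, well below the 0.8 threshold often used as a rule of thumb (the "four-fifths rule"). Note that the metrics can disagree: here group B has higher precision but a much lower true positive rate, so the choice of metric should follow from the harms that matter in the application.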

By following this step-by-step guide, developers can proactively test AI systems for bias and work towards creating more fair and equitable technology. It is essential to prioritize the testing and mitigation of biases to prevent the perpetuation of discrimination and inequalities in AI systems.
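
For the statistical analysis step, one common choice (an assumption here, since the guide does not prescribe a specific test) is a two-proportion z-test on the favorable-outcome rates of two groups. The sketch below uses only the standard library; the counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value). Uses the pooled proportion under the null
    hypothesis that both groups share the same underlying rate.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0 or all 1")
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 50 of 100 approved in group A, 30 of 100 in group B.
z, p_value = two_proportion_z_test(50, 100, 30, 100)
print(f"z = {z:.3f}, p = {p_value:.4f}")
```

A small p-value indicates that the gap is unlikely to be due to chance alone. It does not say whether the gap is justified, and with small groups a real disparity can fail to reach significance, so the test complements the fairness metrics rather than replacing them.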


Mitigating biases in AI systems

Mitigating biases in AI systems is a crucial step in creating technology that is fair and equitable. It involves taking proactive measures to reduce and eliminate biases that may be present in the data, algorithms, or decision-making processes. Here are some strategies for mitigating biases in AI systems:

- Diverse and representative data: One of the key ways to mitigate biases is by ensuring that the training data used to develop AI models is diverse and representative. This means including data from a wide range of sources and demographics, and avoiding data that may be biased or skewed. More representative data reduces the risk of biased decisions, although it does not eliminate that risk on its own.

- Regular bias assessment: It is important to regularly assess AI systems for biases and evaluate their performance. This can involve conducting audits and reviews to identify any biases that may have emerged, and taking corrective action to address them. Regular monitoring and evaluation help to ensure that biases are being effectively mitigated.

- Algorithmic transparency: Transparency in algorithms is crucial for detecting and mitigating biases. Developers should strive to make their algorithms transparent and understandable, so that biases can be identified and corrected. By providing explanations and justifications for the decisions made by AI systems, biases can be detected and addressed more effectively.

- Bias testing and evaluation: Implementing rigorous bias testing and evaluation processes can help in identifying and mitigating biases. This can involve conducting sensitivity analyses to understand how changes in the input data affect the outcomes, and exploring different scenarios to ensure that the AI system is treating all individuals and groups fairly.

- Regular retraining and updating: AI systems should be regularly retrained and updated with new data so that they stay current. As societal norms and values evolve, biases can emerge or change. Continuously updating the training data and retraining the models, followed by a fresh bias assessment, helps the system adapt to changing circumstances.

- Ethical guidelines and codes of conduct: Establishing ethical guidelines and codes of conduct for the development and deployment of AI systems can help mitigate biases. These guidelines should address issues such as fairness, transparency, accountability, and inclusivity. By adhering to these guidelines, developers can ensure that their AI systems are designed and used in an ethical and responsible manner.
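
The sensitivity analysis mentioned in the list above can be sketched as a simple flip test: change only the protected attribute of each input and count how often the prediction changes. The helper `flip_rate`, the two toy models, and the field names `experience` and `group` are hypothetical and exist only to illustrate the idea.

```python
def flip_rate(predict, rows, attribute, values):
    """Fraction of rows whose prediction changes when `attribute` is swapped.

    `values` is a pair (a, b); every row is assumed to hold one of the two.
    """
    a, b = values
    changed = 0
    for row in rows:
        flipped = dict(row)
        flipped[attribute] = b if row[attribute] == a else a
        if predict(row) != predict(flipped):
            changed += 1
    return changed / len(rows)


# Two hypothetical models for illustration only.
def biased_model(row):
    # Adds a bonus for group A, so the protected attribute drives outcomes.
    score = row["experience"] + (2 if row["group"] == "A" else 0)
    return 1 if score >= 5 else 0


def blind_model(row):
    # Ignores the protected attribute entirely.
    return 1 if row["experience"] >= 5 else 0


rows = [{"experience": e, "group": "A" if e % 2 else "B"} for e in range(1, 9)]

biased_rate = flip_rate(biased_model, rows, "group", ("A", "B"))
blind_rate = flip_rate(blind_model, rows, "group", ("A", "B"))
print(f"biased model flip rate: {biased_rate:.2f}")
print(f"blind model flip rate:  {blind_rate:.2f}")
```

A flip rate of zero only shows that the model does not use the attribute directly. Other features can act as proxies for it, so this check should be combined with the group-level metrics rather than used alone.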

Mitigating biases in AI systems is an ongoing process that requires continuous effort and vigilance. By implementing these strategies and prioritizing the mitigation of biases, developers can contribute to the creation of technology that is fair, unbiased, and equitable.
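
One concrete preprocessing option, offered here as an example rather than as the method this article prescribes, is reweighing: each training example gets a weight so that group membership and label become statistically independent in the weighted data. The sketch below assumes the same hypothetical record layout (`group`, `hired`) used for illustration and computes the weight as P(group) × P(label) / P(group, label).

```python
from collections import Counter


def reweighing_weights(records, group_key, label_key):
    """Return {(group, label): weight} that balances labels across groups."""
    n = len(records)
    group_counts = Counter(r[group_key] for r in records)
    label_counts = Counter(r[label_key] for r in records)
    joint_counts = Counter((r[group_key], r[label_key]) for r in records)
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for (g, y) in joint_counts
    }


def weighted_positive_rate(records, weights, group, group_key, label_key):
    """Positive-label rate for one group after applying the weights."""
    rows = [r for r in records if r[group_key] == group]
    total = sum(weights[(group, r[label_key])] for r in rows)
    positive = sum(weights[(group, 1)] for r in rows if r[label_key] == 1)
    return positive / total


# Hypothetical toy data: group B is rarely labeled positive.
records = (
    [{"group": "A", "hired": 1}] * 30
    + [{"group": "A", "hired": 0}] * 30
    + [{"group": "B", "hired": 1}] * 5
    + [{"group": "B", "hired": 0}] * 35
)

weights = reweighing_weights(records, "group", "hired")
rate_a = weighted_positive_rate(records, weights, "A", "group", "hired")
rate_b = weighted_positive_rate(records, weights, "B", "group", "hired")
print(f"weighted positive rate: A={rate_a:.2f}, B={rate_b:.2f}")
```

After weighting, both groups have the same positive rate as the dataset overall. The weights would then be passed to a learner that accepts per-sample weights, and the resulting model should still be re-tested, since balanced training data does not guarantee balanced predictions.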


The role of transparency and accountability in mitigating bias

Transparency and accountability play a crucial role in mitigating bias in AI systems. By ensuring that AI systems are transparent and accountable, developers can identify and address biases that may be present in the data, algorithms, or decision-making processes. Here are some ways in which transparency and accountability can contribute to the mitigation of bias:

- Explainability of AI systems: It is important for developers to make AI systems more explainable and provide clear explanations of how they work. This allows users and stakeholders to understand the underlying processes and identify any potential biases. By understanding how the system makes decisions, it becomes easier to detect and address biases.

- Ethical guidelines and standards: Establishing ethical guidelines and standards for the development and deployment of AI systems helps ensure transparency and accountability. These guidelines can outline best practices for mitigating bias and provide a framework for addressing potential biases. Adhering to these guidelines can help developers identify and rectify biases in AI systems.

- Auditing and third-party assessments: Regular audits and assessments of AI systems by independent third parties can help ensure transparency and accountability. These assessments can identify any biases or discriminatory patterns in the system and provide recommendations for improvement. By involving external experts, developers can gain valuable insights into potential biases that may have been overlooked.

- User feedback and input: Allowing users to provide feedback and input on AI systems can enhance transparency and accountability. Users can report any biases or discriminatory outcomes they experience, providing developers with valuable information for addressing bias. By actively seeking user feedback, developers can ensure that biases are identified and corrected in a timely manner.

- Bias impact assessments: Conducting regular bias impact assessments can help developers understand how biases may affect different individuals or groups. These assessments can reveal any biases that have been unintentionally introduced into the system and guide efforts to mitigate them. By understanding the potential impact of biases, developers can take proactive measures to address them.

- Regular monitoring and evaluation: Transparency and accountability require developers to continuously monitor and evaluate AI systems for biases. This involves analyzing the outcomes of the system and assessing whether they are fair and unbiased. By regularly monitoring the system, developers can identify any biases that may have emerged over time and take corrective action.

Transparency and accountability are essential aspects of mitigating bias in AI systems. By ensuring that AI systems are transparent, explainable, and subject to regular audits and assessments, developers can enhance their ability to detect and address biases. Additionally, by involving users in the feedback process and conducting bias impact assessments, developers can ensure that AI systems are fair, unbiased, and accountable to all individuals and groups.
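
Regular monitoring can be partly automated. The sketch below is one possible shape for such a check, under stated assumptions: decisions arrive in labeled batches, each batch maps a group name to a list of binary outcomes, and an alert is raised when the ratio of the lowest to the highest selection rate falls below a threshold. The function name, batch labels, and the 0.8 default are illustrative choices, not a standard API.

```python
def monitor_selection_rates(batches, threshold=0.8):
    """Return [(batch_name, ratio)] for batches whose min/max selection-rate
    ratio across groups falls below `threshold`."""
    alerts = []
    for name, outcomes in batches:
        rates = {g: sum(v) / len(v) for g, v in outcomes.items()}
        lowest, highest = min(rates.values()), max(rates.values())
        # If no group receives favorable outcomes, treat rates as equal.
        ratio = lowest / highest if highest > 0 else 1.0
        if ratio < threshold:
            alerts.append((name, ratio))
    return alerts


# Hypothetical batches of binary decisions (1 = favorable) per group.
batches = [
    ("week 1", {"A": [1, 1, 0, 0], "B": [1, 1, 0, 0]}),
    ("week 2", {"A": [1, 1, 1, 0], "B": [1, 0, 0, 0]}),
]

alerts = monitor_selection_rates(batches)
for name, ratio in alerts:
    print(f"ALERT {name}: selection-rate ratio {ratio:.2f} below threshold")
```

Logging these ratios over time, alongside the alerts, also gives auditors and third-party assessors a record to review.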
